Nick, for people who haven’t been following your work, can you please bring them up to speed on what you have been up to?

What I’ve been doing for the past few months is rendering computer-generated characters and vehicles into real-life photographs, particularly things from the game Half Life 2. The process involves capturing the lighting data of the scene using a reflective sphere and then using that data to illuminate the CG elements. Getting the CG elements to look like they’re really there is the whole point of doing this, and most of my time is spent on color, contrast and perspective matching. After coming up with a few good shots, I decided to post them in the Ideas & Suggestions section of the Facepunch Forums, showing everybody what Half Life 2 could look like with High Dynamic Range image-based lighting. I got more feedback than I expected; a lot more. Since I made the first post, the thread has gained over 700,000 views and has been filled with people who want to know how to do what I do. A few people have picked things up really well, and some of them have offered to help us produce our short film, Half Life: Seven Hour War. Today, the thread is an ongoing discussion amongst beginners who are learning, very quickly, how to composite CG elements into photographs.

How important do you think your schooling and your photography/art subjects are in landing a job? How are you coping with the workload, given how much time Seven Hour War and your presence in the community take up?

Ever since I started receiving positive feedback and offers from people and even companies, school has seemed less important to me. It hasn’t helped me in any way to get to this point in my life, but I still want to get into the film industry, and that isn’t going to be easy if I give school the kick. My progress in my Photography classes isn’t too good. I received a D grade for it last year, and I feel it will be worse this year. Apparently, Photography is more about writing essays and sticking to a schedule than taking actual photos.

Our progress with Seven Hour War is going very slowly. Many of our group members don’t have enough time and are more focused on school work and exams. I myself have all the time in the world. I’m still very eager to rent the equipment and get filming, but our planning still isn’t finished. I’m starting to wonder if anyone still has the will to make this film.

I don’t spend much time in the community anymore. It’s full of people trying to do what I do, and helping that many people is something I just don’t have time for.

What sort of challenges have you been facing in an attempt to get your project off the ground?

The biggest challenge at the moment is getting the planning done. Putting together a whole story isn’t easy, especially when you have to make a script out of it later. Once our story is done, the next challenge will be hiring equipment. The only budget we currently have is the money we make ourselves. But yeah, the biggest challenge now is getting the planning finished. It’s the least interesting part of the project, to be honest. Only when we start filming and editing will things get interesting.

You clearly have some skill in traditional artwork in addition to CG. How important do you think this is for your 3D work?

CGI will be a critical part of Seven Hour War, so I feel that a great deal of my efforts in the production will go towards the CGI and 3D work. Animation isn’t something I’m good at, though, so we’re getting help from people across the internet. Storyboarding will go hand-in-hand with the scripting stage, mostly with action and battle scenes. It’s extremely difficult to plan an action scene without storyboards or some kind of pre-visualization, so drawing up storyboards will be a big part of the film’s production.

What has been happening in the project for the past several months?

Since we started, we’ve had many offers from people who want to help. One of the key new members of the team is Ryan McCalla who is fantastic at digital 3D animation. Ryan and I have been working together across the net to produce test shots to see how we’d go in the film’s production. Our main writer, Josh Young, has been working heavily on our story lately and says it should be finished in a week or two, at which point the team will get together and read the whole thing before developing storyboards and setting everything in concrete. Only when planning is 100% complete can we hire equipment and begin filming.

What do you hope to achieve with the project?

I suppose it comes down to the question: why make movies? Most people will say it’s for the money. I, on the other hand, wouldn’t care in the least if I didn’t make a cent. I want to make movies as a career one day and bring my ideas to the screen. I’m still not entirely sure if this is the beginning, but what I do know is that I’m going to discover how good a director I’ll make some day. I think I speak for the team when I say we’re doing this for fun. We expect to make no profit at all. You have to admit, a Half Life 2 film would be pretty cool; I guess people like us simply can’t wait!

Can you tell us who else is involved in the project and their roles?

Our primary team (the people who live in the same area) consists of Luke Mundy, Josh Young, Mat Fuller, Michael Acott and myself. Our roles are all much the same at the moment, but we plan to have Luke as our head manager, Josh as our Finance Manager and Head Writer, Mat Fuller as head of prop construction, and Mike as the equipment manager.

Your project draws an interesting parallel with fan films such as the ones featured on TheForce.net and Machinima. Are you involved in any of those communities? Do you think this is something that may become more popular with computer games?

We aren’t involved in any fan film organizations of any kind. I’ve heard of TheForce.net many times before, but for some reason have never taken much interest in it. When you look at the number of films out there based on computer games, there really aren’t that many at all. I think our film will either crash and burn like many game-based films out there, or it will be better than expected and might even show that game-based films can actually turn out well. The one thing I want this project to be is true to the game. I want everything to abide by the Half Life universe, and I especially want the action scenes to incorporate everything the game does. I really want you to feel like you’re playing the game when watching the film. This means little dialogue, little music, extremely heavy atmospheres; the works. I see it very clearly in my mind, and therefore I’m very persistent with my ideas when the team has to decide how a certain shot is constructed. Many people think that a Half-Life movie (or any game-based film for that matter) wouldn’t work, but I believe that anything’s possible in the movies.

The amount of traffic and replies you’ve received from your work on various forums has been huge, to say the least. How have you found people’s reaction to your work and Seven Hour War?

Many people are interested in what I’ve done, there’s no doubt about that. Hundreds of people have downloaded trial versions of the software I use to attempt it themselves. I’m actually rather surprised by people’s reactions to SHW, though; I was expecting a greater reaction to a Half Life 2 short film than to some pictures of a Super Soldier in my backyard. My MSN Messenger became so full of contacts that I actually hit the limit and had to open a new account.

What are the most common questions you get asked in regards to the project and HDR in general?

The most common question has to be “Can you teach me how to do that?” People want to know the details so quickly that it’s unbelievable. It’s like knocking on NASA’s door and asking “How did you build that rocket?” People instantly assume this is something you can learn within a day or two. I’ve been doing this for about 2 or 3 years and I still haven’t quite mastered it. The next most common question is “Where did you get your sphere?” At least this is a realistic question. I was baffled by it for a long time when I started out. I tried Christmas decorations, ball bearings, even hub caps, until finally I bought a 15cm garden ornament and mounted it on a tripod using a threaded hole I cut through the bottom. It’s been perfect ever since; it just needs a good polish every now and then!

What are the biggest misconceptions you’ve found from people in respect to HDR rendering?

The biggest, by far, is that people assume it’s an easy job. Only when they open a trial version of the software do they realize it’s much more complicated than it appears to be. They get one program, then realize they need another one, or they have no idea how to do this or that, and so on. There have been many misconceptions in the past, some crazier than others. There was even one fellow on Fark.com who confidently stated that it’s all done in Photoshop. Then again, you can’t blame someone for getting the wrong idea; this isn’t exactly the most common activity going around now, is it?

How long did it take you to get up to speed on HDRI rendering techniques? Are there any people or sites you can specifically attribute to your learning process?

When I first started out with HDRI-based rendering (back when I was using Cinema 4D), I was astonished by what you could achieve with it. I already had a background of about 2 years of rendering and graphics work, so this technology had me extremely excited. I got the hang of it eventually, and then switched to 3DSMax, practically having to start all over again. I came to grips with Brazil, a rendering engine from Splutterfish, and started learning how to create my own light probe images. Only once I started rendering HL2 characters into photos did I get into light and camera matching. If you compare the first few images I posted on the Facepunch forums to the latest ones, you’ll see that things have got a lot better. The biggest contributor to my progress has been practice and experimentation. I know it’s an old and boring saying, but practice really does make perfect.

What drew you to creating HDRI specifically? Why not use some other lighting method?

HDRI-based lighting illuminates a synthetic scene with lighting captured from real life. Reproducing that by hand with omni lights and area lights is something you can spend hours on, which is why I got so interested in HDRI technology in the first place. A calibrated double-sided HDRI map will give you extremely realistic results when rendering synthetic things into a real-life photograph, and there’s great satisfaction in knowing you’ve done everything as accurately as you can.

Is HDR truly as quick and easy as people think it is? How fast is it compared to creating a lighting setup using regular lights? What are the advantages of using an HDR environment for lighting/reflections as opposed to just a normal LDR background with a normal lighting setup?

The actual process of taking photographs and processing a double-sided HDR probe takes me about 10 to 15 minutes. Setting up a scene using the HDR image takes me no longer than a minute from the ground up. It’s like riding a bike: once you know how to do it, you just jump on and off you go. What takes a long time is actually compositing the synthetic characters into the real-life scene. There’s color matching to go through, perspective matching, camera matching, and sometimes even film grain matching. Most of this stuff is done by eye, as there is no simple way of doing it mathematically.
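Part of "processing" an HDR probe is merging several bracketed photographs of the sphere into one high-dynamic-range image. Tools such as HDRShop do this for you; purely to illustrate the idea, here is a minimal sketch for a single pixel. It assumes the pixel values have already been made linear (real cameras need their response curve recovered first) and that each photo's exposure time is known. The function names are hypothetical, not any tool's API.

```python
# Minimal sketch: merge one pixel from bracketed exposures into relative radiance.
# Assumption: values are already linear in 0.0-1.0 and exposure times are known.

def weight(z):
    """Hat-shaped weight: trust mid-tones, distrust values near black or clipping."""
    return max(0.0, 1.0 - abs(2.0 * z - 1.0))

def merge_exposures(values, times):
    """Weighted average of each exposure's radiance estimate (value / exposure time)."""
    num = 0.0
    den = 0.0
    for z, t in zip(values, times):
        w = weight(z)
        num += w * (z / t)
        den += w
    if den == 0.0:
        # Every sample was black or clipped: fall back to the shortest exposure.
        i = min(range(len(times)), key=lambda k: times[k])
        return values[i] / times[i]
    return num / den
```

For example, a pixel photographed at 1/8 s, 1/2 s and 2 s that reads 0.25, 1.0 and 1.0 merges to a relative radiance of 2.0: the two clipped readings get zero weight, so only the short exposure counts.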

An HDRI is an image, so it’s a matter of wrapping it around your synthetic scene as a sphere and having it generate light onto the scene. This doesn’t take long at all. Setting up actual lights such as Omnis and Directional lights obviously takes longer, especially if you’re trying to match it with a photograph.
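"Having it generate light onto the scene" means, for a diffuse surface, adding up the light arriving from every direction in the HDR image, weighted by how directly it hits the surface. The renderer does this internally with many refinements; as a rough sketch, assuming the probe has been unwrapped into a latitude-longitude map with +y pointing up and a simple Lambertian surface, the calculation looks like this (function names are illustrative only):

```python
import math

def irradiance(env, normal):
    """Cosine-weighted sum of a lat-long environment map, seen from a surface normal.

    Assumptions: env is a list of rows of (r, g, b) radiance tuples, row 0 is
    straight up (+y); normal is a unit-length (x, y, z) tuple.
    """
    h = len(env)
    w = len(env[0])
    d_theta = math.pi / h
    d_phi = 2.0 * math.pi / w
    total = [0.0, 0.0, 0.0]
    for i, row in enumerate(env):
        theta = (i + 0.5) * d_theta            # polar angle measured from +y
        sin_t, cos_t = math.sin(theta), math.cos(theta)
        solid_angle = sin_t * d_theta * d_phi  # pixels near the poles cover less of the sphere
        for j, (r, g, b) in enumerate(row):
            phi = (j + 0.5) * d_phi
            d = (sin_t * math.cos(phi), cos_t, sin_t * math.sin(phi))
            cos_term = sum(n * c for n, c in zip(normal, d))
            if cos_term <= 0.0:
                continue                       # light from behind the surface contributes nothing
            k = cos_term * solid_angle
            total[0] += r * k
            total[1] += g * k
            total[2] += b * k
    return tuple(total)

def diffuse_color(env, normal, albedo):
    """Lambertian shading: outgoing radiance = albedo / pi * irradiance."""
    e = irradiance(env, normal)
    return tuple(a / math.pi * c for a, c in zip(albedo, e))
```

As a sanity check, a uniform white sky of radiance 1.0 gives an irradiance of about pi for any normal, so a 50% grey surface comes out at about 0.5, exactly as it would under that sky.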

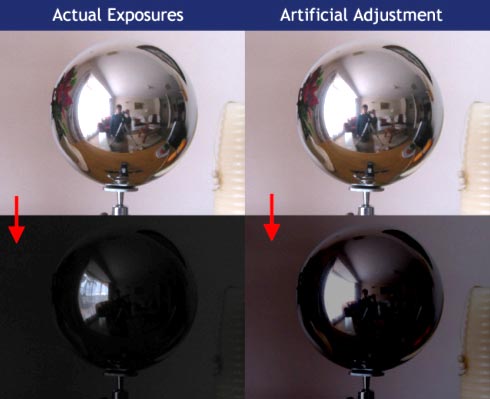

The difference between an HDR image and an LDR image is that an HDR consists of more than one exposure, and will therefore cast multiple levels of light onto your scene. I’ve been asked this question before and prepared an image demonstrating the difference between the down-scaling of a high-dynamic range image and a low-dynamic range image:

Also, when a synthetic object reflects an LDR image, it’s only reflecting one level of light, so the reflections appear flat and fake.
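The same point can be put in numbers. An LDR photo clips everything brighter than its maximum to the same value, so when you lower the exposure (as a reflection effectively does), a bright sky and the sun turn into the same dull grey, while an HDR image keeps their real ratios. The radiance values below are made up purely for illustration:

```python
# Sketch of why a darkened LDR image reflects "one level of light".
# Assumed values: a wall, a bright sky and the sun, in made-up relative linear units.

def to_ldr(values):
    """An LDR photo can only store 0.0-1.0; everything brighter clips to 1.0."""
    return [min(v, 1.0) for v in values]

def scale_exposure(values, stops):
    """Lower the exposure by a number of photographic stops (each stop halves the light)."""
    factor = 2.0 ** -stops
    return [v * factor for v in values]

scene = [0.3, 3.0, 5000.0]   # wall, bright sky, sun
hdr = scene                  # an HDR image keeps the real ratios
ldr = to_ldr(scene)          # an LDR photo clips the sky and the sun to the same 1.0

print(scale_exposure(hdr, 3))  # [0.0375, 0.375, 625.0]   the sun still dominates
print(scale_exposure(ldr, 3))  # [0.0375, 0.125, 0.125]   sky and sun are now identical
```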

What process do you use to white balance your HDR environment?

Most of the time I just let the camera’s white balance run on auto. Color and contrast matching I do by eye. I have painted half of my sphere a perfect grey (50% black and 50% white), and photograph it along with the other reference shots. What I do then is create a CG sphere of the same shade next to the real one, and alter the HDR image’s values until both spheres appear identical. This is where you can really be as precise and accurate as you like, and getting a perfect match between the two can produce an extremely realistic result.
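Nick adjusts the HDR values by eye until the CG and real grey spheres match. A numeric counterpart to the colour part of that step, sketched here under the assumptions that the HDR values are linear and the grey paint is truly neutral, is to measure the average RGB of the grey half in the HDR image and divide out the colour cast. The function names and the optional target brightness are hypothetical:

```python
# Sketch: per-channel gains from the grey half of the sphere.
# Assumptions: measured_rgb is the averaged linear RGB of the grey patch in the HDR image,
# and the paint reflects red, green and blue equally.

def grey_gains(measured_rgb, target_grey=None):
    """Multipliers that make the grey patch neutral, optionally at a chosen brightness."""
    r, g, b = measured_rgb
    if target_grey is None:
        target_grey = (r + g + b) / 3.0   # keep overall brightness, only remove the colour cast
    return (target_grey / r, target_grey / g, target_grey / b)

def apply_gains(pixel, gains):
    """Scale one (r, g, b) pixel of the HDR image by the per-channel gains."""
    return tuple(c * k for c, k in zip(pixel, gains))
```

Passing `target_grey` set to the value of the rendered CG sphere would also match brightness; contrast and the final look would still be judged by eye, as Nick describes.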

Can you give us a breakdown of the tools and hardware you are currently using to create your HDRs?

GCFScape: This is what I use to extract models and textures from the HL2 GCF files.

VPK Tools: This small program converts the textures to TGA files.

MDL Decompiler: This converts the MDL model files into SMD files.

3DSMax SMD importer: I install this into 3D Studio Max to enable it to read SMD files.

The hardware I’m currently running on is a 3.0 GHz HT processor, 1024 MB of DDR RAM, a Sapphire Radeon 9600XT 256 MB and an 80 GB HDD. The camera I use is a Canon PowerShot G2.

What level of quality and resolution does this result in and what size are you planning to output the end product?

The resolution I always use for HDR images is 1024×768, although a blurred 640×480 version (for the lighting) delivers the same result and renders faster because of the smaller resolution. For reflections, I feed a higher-resolution, unblurred version of the HDR image into the background channel of a Raytrace shader, which is plugged into the material’s reflection slot. For video, we’re aiming at a resolution of 720×576, but many people have suggested we go for the 16:9 format, as it is more compatible with today’s rise of HDTV and widescreen displays.
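One way to see why a blurred, smaller probe lights a scene just as well: diffuse lighting averages light over large areas of the sphere, so what matters is the total energy, not fine detail. Shrinking an image by averaging neighbouring pixels keeps that total. The sketch below halves one channel with a 2×2 box filter; it is a simplified stand-in (the 1024×768 to 640×480 step is not a clean halving), and the function names are illustrative:

```python
# Sketch: a 2x2 box-filter downsample of one channel of an HDR image.
# Averaging (rather than dropping every other pixel) keeps the mean radiance,
# so a small bright spot becomes a larger, dimmer patch with the same energy.

def downsample_2x(channel):
    """Halve width and height by averaging each 2x2 block; assumes even dimensions."""
    out = []
    for y in range(0, len(channel), 2):
        row = []
        for x in range(0, len(channel[0]), 2):
            block = (channel[y][x] + channel[y][x + 1]
                     + channel[y + 1][x] + channel[y + 1][x + 1])
            row.append(block / 4.0)
        out.append(row)
    return out

def mean(channel):
    """Average value of a 2D list of numbers."""
    return sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
```

Reflections are the opposite case: they show the environment directly, which is why the sharper, higher-resolution version is kept for the reflection slot.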

Have you had any specific problems or challenges creating certain types of HDR?

Yes, the sun is always the biggest challenge. The sun is FAR brighter than anything else in the HDR image, and that’s why it never has the effect you expect when using it to illuminate your scene. To overcome this, I place an omni light in the CG scene exactly where the sun would be, and this usually solves the problem rather well. Most of the time I decrease the brightness of the HDR image because of the additional light coming from the omni. The best thing to do then is to further match up the grey CG sphere and the real one after you’ve placed the omni; this way you’ll see how closely your omni imitates the sun in the final shot. Depending on the lighting conditions of the real-life scene, I sometimes change the color of the omni as well. For example, the sun is more of a golden yellow in the evening, as opposed to a midday sun behind a few clouds.
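If the probe is a single mirror-ball photo, the position "where the sun would be" can be estimated from the brightest pixel on the ball. The sketch below assumes a square crop containing exactly the ball, shot from far enough away to treat the view as orthographic, with the camera on the +z axis; the function names are hypothetical:

```python
import math

def mirror_ball_direction(px, py, size):
    """World direction reflected by pixel (px, py) of a size x size mirror-ball crop.

    Assumptions: orthographic view along -z (camera on +z), image y grows downwards.
    Returns a unit (x, y, z) vector, or None for pixels outside the ball.
    """
    u = (px + 0.5) / size * 2.0 - 1.0   # -1..1, left to right
    v = 1.0 - (py + 0.5) / size * 2.0   # -1..1, bottom to top
    r2 = u * u + v * v
    if r2 > 1.0:
        return None
    nz = math.sqrt(1.0 - r2)            # the ball's surface normal here is (u, v, nz)
    # Reflect the viewing ray (0, 0, -1) about that normal.
    return (2.0 * nz * u, 2.0 * nz * v, 2.0 * nz * nz - 1.0)

def brightest_pixel(luminance):
    """(px, py) of the largest value in a 2D list; usually the sun in an outdoor probe."""
    best = max((val, x, y) for y, row in enumerate(luminance) for x, val in enumerate(row))
    return best[1], best[2]
```

Multiplying the returned direction by a distance larger than the scene gives a starting position for the omni; its brightness and colour would still be tuned against the grey spheres as described above.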

How do you find CS2 compared to HDRShop for creating HDR images? Any experiences you can share?

CS2 is nice, but it’s expensive as hell, and it doesn’t give you nearly as many options or as much control as HDRShop does (which is free, by the way). It’s one of those “nice n easy” methods of HDR image processing, and therefore your options are very limited.

How excited are you about Valve’s HDR-capable Lost Coast expansion? Are you one of the lucky few with a computer good enough to run it?

Heh heh, I don’t think so. The only difference I’ve seen with the Lost Coast so far is a glow effect which appears around bright parts of the screen. I think what they mean by the use of HDR technology is that the lighting is of high dynamic range, so the levels of light in the game are far more realistic (such as the sun being as bright as it should be). I don’t really know much about the Lost Coast, so there’s not much I can say about it. Personally, though, I’m not too interested. I finished Half Life 2 a long time ago.

Have you played any of the other HDR-capable games that are out there at the moment, such as Far Cry or Splinter Cell: Chaos Theory?

Yeah, I’ve played both Far Cry and Chaos Theory. The graphics of both of them are very impressive, especially Far Cry. Battlefield 2 is also really good. One of the first things I noticed about BF2 is that the light actually bounces around the scene like in real life. Soldiers ran across the sand and their surfaces were affected by light bouncing off the sand. It’s not true indirect illumination, but it’s damn close, and it definitely makes the game look far more realistic than you’d first expect. I seriously think that within 5 to 10 years’ time, games will be so realistic that your eyes will be completely fooled.

Thanks for your time and good luck.

Hyperfocal Design
